A new spark in AI

It is said that artificial intelligence (AI) will revolutionise societies in the way fire transformed humanity in the prehistoric era. Like a spark that triggers a wildfire, the launch of ChatGPT in late 2022 has ignited an explosion of public enthusiasm for generative AI, potentially kickstarting a new era of rapid growth for machine learning.

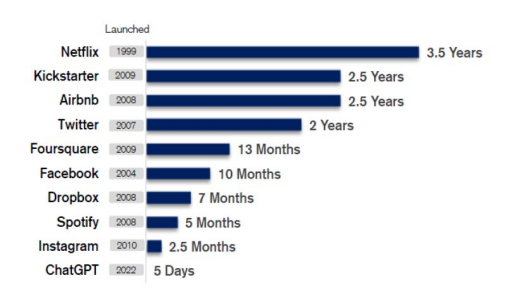

Amazingly, ChatGPT, a free-to-use AI chatbot developed by Microsoft-backed OpenAI, took just five days after its low-key launch in late November 2022 to reach a million active users, making it the fastest-growing consumer application (app) in history (see Chart 1).

Chart 1: ChatGPT achieves 1 million users in record time

Source: Statista

Any reference to a particular security is purely for illustrative purpose only and does not constitute a recommendation to buy, sell or hold any security. Nor should it be relied upon as financial advice in any way.

With the ability to create original jokes, write essays on any topic and even offer tips on relationships, ChatGPT has been on everyone’s lips of late after becoming an overnight sensation. In a flash, ChatGPT has enlivened the consumer market for generative AI, which produces different types of content (including text, images, sounds and other forms of data) in response to a prompt. Inadvertently, it has also triggered fresh competition among technology heavyweights over their AI offerings.

Hot on the heels of OpenAI, some of the world’s biggest technology platform companies have in recent months scurried to roll out their own versions of AI tools, either to take on ChatGPT or to ride the popularity of this all-the-rage generative AI chatbot.

In February 2023, software giant Microsoft rolled out a new version of its search engine Bing (with a new Bing AI chatbot), powered by GPT-4, an upgraded version of the same AI technology that ChatGPT uses. In March, search engine behemoth Google launched its AI chatbot called Bard, after declaring a “competitive code red” in January, and Chinese search engine Baidu unveiled the Ernie Bot, which is powered by its own deep-learning model. Not to be outdone, Alibaba Cloud, the Chinese e-commerce giant’s cloud computing arm, rolled out a similar AI chatbot called Tongyi Qianwen in April, and the list goes on.

To be sure, the excitement about AI and the deep learning capability of machines is not new; we have been talking about these technology buzzwords for the past 20 years. But compared with five years ago, AI has reached a point where it is becoming all-encompassing and moving rapidly up the s-curve (an s-shaped graph that represents a slow start, a period of exponential growth and an eventual plateau over time). Generative AI is already here, and rapid progress is also taking place in other key areas of next-generation AI, such as self-supervised learning, decision intelligence, responsible AI and advanced virtual assistants, as well as human-machine touchpoint augmentation, namely multimodal user interfaces, Internet of Things device integration and voice biometrics.
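The article does not commit to a particular model of the s-curve, but one common way to formalise it is the logistic function, shown here purely as an illustrative assumption:

$$
f(t) = \frac{L}{1 + e^{-k\,(t - t_0)}}
$$

where $L$ is the eventual plateau (the ceiling on adoption), $k$ sets how steep the growth phase is, and $t_0$ is the midpoint of the curve. Differentiating gives

$$
\frac{df}{dt} = k\, f(t)\left(1 - \frac{f(t)}{L}\right),
$$

so while $f(t)$ is still small relative to $L$, growth is approximately exponential ($df/dt \approx k\,f$); growth is fastest at $t = t_0$, where $f = L/2$; and it slows as $f$ approaches the plateau $L$.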

Exponential growth of AI kicks in

In our view, AI is now near the exponential growth phase of the s-curve, and the speed of its evolution is surprising people. As investors whose investment philosophy focuses on fundamental change, we are excited about the opportunities that have emerged with the recent advances in AI.

As we see it, AI growth beneficiaries can be found not only in the developed world but also in Asia. Evidently, ChatGPT’s meteoric rise to fame and its ability to draw millions of active users in a short span of time have prompted big technology companies (big techs) to rush to offer and monetise their state-of-the-art AI tools across multiple platforms, including enterprise-focused and consumer-centric ones.

This intense competition is expected to drive more spending on high-performance computing and the production of AI-focused microchips, such as graphics processing units (GPUs) and application-specific integrated circuits (ASICs), all of which should benefit high-end chipmakers.

In a recent report, market intelligence firm IDC estimates that worldwide spending on AI, including hardware, software and services for AI-centric systems, will hit US dollars (USD) 154 billion in 2023, up 27% from 2022. According to IDC, global AI spending will surpass USD 300 billion in 2026, as the ongoing integration of AI into numerous products drives a compound annual growth rate (CAGR) of 27.0% from 2022 to 2026.
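As a rough consistency check on the IDC figures, the standard CAGR formula, (end value / start value)^(1 / years) - 1, can be applied in a few lines of Python. This is a minimal sketch: the helper names `cagr` and `project` are our own, and it assumes, as a simplification rather than IDC’s methodology, that growth is a constant 27% in every year from 2022 to 2026.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(value: float, rate: float, years: int) -> float:
    """Grow `value` at a constant annual `rate` for `years` years."""
    return value * (1 + rate) ** years


# Figures quoted from IDC in the text (USD billion).
spend_2023 = 154.0
growth_2023 = 0.27      # "up 27% from 2022"
cagr_2022_2026 = 0.27   # CAGR quoted for 2022 to 2026

# Implied 2022 base, backed out from the 2023 figure.
spend_2022 = spend_2023 / (1 + growth_2023)

# Assumption: the same 27% rate holds in every year from 2022 to 2026.
spend_2026 = project(spend_2022, cagr_2022_2026, 4)

print(f"Implied 2022 spending: USD {spend_2022:.1f} billion")
print(f"Implied 2026 spending: USD {spend_2026:.1f} billion")
print(f"Round-trip CAGR: {cagr(spend_2022, spend_2026, 4):.1%}")
```

Backing the 2022 base out of the 2023 figure gives about USD 121 billion, and compounding that at 27% for four years gives roughly USD 315 billion for 2026, which is consistent with IDC’s projection that spending will surpass USD 300 billion.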

AI models getting better with higher efficiency and lower costs

Many AI foundation models (large neural networks that process huge amounts of data and are trained largely through unsupervised machine learning) have made significant progress and become highly efficient in recent years.

For example, OpenAI’s newly-launched GPT-4, which is able to analyse and comment on graphics and images, has improved considerably from its previous version called ChatGPT-3.5 that is primarily text-focused. Research1 has shown that GPT-4 has 53% less hallucination (responses by AI that sound plausible but are either incorrect or nonsensical) and is 110% more truthful (degree of AI’s ability not to produce untruthful content) as compared to

Any reference to a particular security is purely for illustrative purpose only and does not constitute a recommendation to buy, sell or hold any security. Nor should it be relied upon as financial advice in any way.

1 https://lifearchitect.ai/gpt-4/

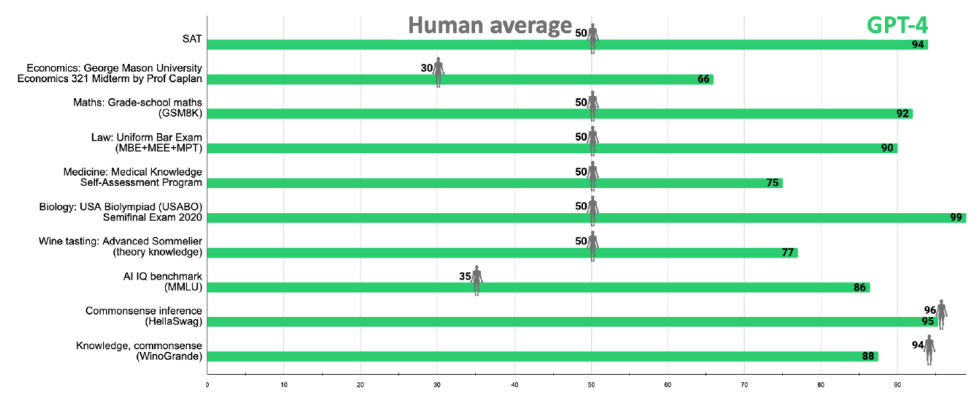

ChatGPT-3.5. Furthermore, this newest version of OpenAI’s multimodal model has a pass rate of about 90%2 for the Uniform Bar exams (compared to 10%2 of GPT-3.5), amongst others (see Chart 2).

Foundation models generally need to be optimally sized, without an excess of parameters and data points, to be efficient. By and large, the more specific the parameters are, the better the outcomes a model will generate. As efficiency increases over time, the cost of training an AI model will come down. The training costs for GPT-3 (an earlier OpenAI model released in June 2020), for instance, were significantly lower than those of AI models from DeepMind, the AI research lab acquired by Google in 2014.
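To make the link between model size and training cost concrete, a widely used rule of thumb from the scaling-law literature approximates training compute as C ≈ 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. The sketch below is our own illustration, not a method from the sources cited here; the GPT-3 figures (175 billion parameters, roughly 300 billion training tokens) are those reported in the GPT-3 paper, and the smaller model is hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the rule of thumb C ~ 6 * N * D.

    N is the number of model parameters and D the number of training tokens;
    the factor 6 covers roughly 2 FLOPs per parameter per token for the
    forward pass and 4 for the backward pass.
    """
    return 6 * n_params * n_tokens


# GPT-3 as described in its 2020 paper: 175 billion parameters, ~300 billion tokens.
gpt3 = training_flops(175e9, 300e9)

# Hypothetical smaller model trained on the same data, for comparison only.
smaller = training_flops(70e9, 300e9)

print(f"GPT-3:   {gpt3:.2e} FLOPs")
print(f"Smaller: {smaller:.2e} FLOPs ({smaller / gpt3:.0%} of GPT-3)")
```

Because compute scales linearly with both N and D under this approximation, a model with 40% of the parameters trained on the same data needs roughly 40% of the compute, which is the intuition behind leaner, better-optimised models being cheaper to train.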

Chart 2: GPT-4 versus humans in exams

Source: https://lifearchitect.ai/iq-testing-ai/, Dr Alan D. Thompson

Selected highlights only. Percentiles: 50 refers to the 50th percentile, taken as the average, which may differ from the actual test average for some tests.

Monetising AI

Among the big technology platform companies, Microsoft currently has a big advantage in AI due to its longstanding partnership with OpenAI, which has helped the US software heavyweight commercialise and monetise advanced AI technologies and tools as premium services on its platform.

Microsoft was early to the party in launching AI models on its platforms and embedding them across its suite of product offerings. The first was GitHub, Microsoft’s code-hosting and collaboration platform for software developers. In 2021, GitHub and OpenAI introduced Copilot, a cloud-based AI tool that suggests code to GitHub users as they write it; it is now offered as a subscription-based service. In February 2023, Microsoft also added more AI-powered capabilities, leveraging the Azure OpenAI Service and GPT, to Viva Sales, its sales application that works with customer relationship management (CRM) systems and was launched in 2022.

Microsoft is also offering premium services that give access to OpenAI’s AI tools. Microsoft Teams (a communication platform), for example, is moving to a premium tier that bundles existing features with access to OpenAI’s models, as well as offering real-time translation across 40 languages.

The holy grail for Microsoft is to bundle AI tools with Office 365, and observers expect that to happen in the near future. Office 365 apps already offer some suggestion features, but more are expected to be added, such as a possible virtual AI assistant, powered by OpenAI’s models, for users willing to pay for such premium add-ons. On the whole, Microsoft, which is charging significant premiums for AI functionality on its platforms, appears to have a competitive edge over other big technology platform companies when it comes to commercialising its AI capabilities.


[2] https://openai.com/research/gpt-4

Google, which has invested heavily in AI and recently rolled out its AI chatbot Bard, is feeling the pressure and trying hard to keep up in the AI race after losing the first-mover advantage to OpenAI and Microsoft. In the Asia region, one big technology platform company that we believe is well positioned in terms of AI capabilities is Baidu, which, in our view, has the most competitive AI product offerings among its Chinese competitors.

Hardware producers and chipmakers riding the AI growth

The rapid advancement of AI technology has brought about a surge in the production of AI microprocessors, AI accelerators (specialised hardware or computer systems designed to accelerate machine learning applications) and many other kinds of hardware that enable high-performance computing, the ability to process data and perform complex calculations at high speed. This trend is likely to gather pace, benefiting many high-end chipmakers and AI-focused hardware manufacturers.

For the high-end hardware and AI-centric microchip markets, American GPU manufacturer Nvidia Corporation and Taiwanese chip manufacturing giant Taiwan Semiconductor Manufacturing Company (TSMC) are best positioned to ride the AI boom, as the go-to suppliers for AI-focused tech companies, in our view.

Among AI processors, which commonly include GPU, ASIC and field-programmable gate array (FPGA) chips, the relatively low-cost GPUs are currently the most widely used for deep learning applications, owing to their high parallel computing power and ability to handle large amounts of data. Nvidia has dominated the global AI GPU market as the world’s leading supplier, with more than 80% market share.

ASIC and FPGA chips, which are more customised and resource-heavy, are more expensive to design and manufacture than GPUs, which are considered mass-market, off-the-shelf processors. Producers of these two types of customised AI-centric chips are also unable to scale up the way Nvidia has for GPUs. The global GPU market, estimated at more than USD 40 billion in 2022, is expected to grow at a CAGR of 25% from 2023 to 2032, according to global market research firm Global Market Insights in its February 2023 report.

In the high-end semiconductor market, TSMC, with a near monopoly on the production of cutting-edge three-nanometre chips, appears well poised for growth as AI takes off. To begin with, Nvidia uses TSMC as the main foundry for its GPUs and all of its other high-end processors. The Taiwanese chipmaking titan is also the leading foundry for ASIC chips designed by tech hyperscalers such as Google, Intel/Habana, Amazon and others. Chips for high-performance computing (HPC) now account for over 40% of TSMC’s sales, and that trend will most likely continue in 2024 and 2025, in our view.

Logic chips, which are generally considered the “brains” of tech equipment and devices, process information to complete tasks. Central processing units (CPUs), GPUs and neural processing units (designed for machine learning applications) are examples of logic chips.

A decade ago, Intel was the dominant producer of logic chips. Since then, TSMC, the largest foundry company in the world with more than 45% market share in logic chip production, has taken market share away from Intel, and that trend is accelerating today given the strong demand for high-end logic chips.

Other key beneficiaries of the AI boom

In Asia, other AI growth beneficiaries include Alchip Technologies, an HPC chip design services company headquartered in Taiwan, and Accton Technology, a Taiwanese company that develops and manufactures networking and communication solutions.

Alchip, which focuses mainly on designing ASIC microchips, benefits from an outsourcing trend among global big tech companies, many of which are pushing into chip development for their internal HPC, AI and machine learning needs.

Likewise, Accton is expected to gain from the increasing demand for higher bandwidth and faster network connections as AI technology proliferates and low-latency computing networks become essential. As a large player in the white-box switch market, Accton will profit from the imminent transition to 400G cloud infrastructure, in our view. With four times the maximum data transfer speed of 100G (400 versus 100 gigabits per second), 400G is the next generation of cloud infrastructure, meeting the growing bandwidth demands of network infrastructure providers.


Geopolitical risks

Investing in the AI segment of Asia, while rewarding, carries its fair share of risks, particularly escalating geopolitical risks from US regulation. One example is the CHIPS Act, passed by the US Congress in 2022 to bring semiconductor manufacturing back to the US.

In October 2022, the US barred its domestic firms, including major chipmakers, from supplying advanced semiconductor chips and chipmaking equipment to Chinese companies. In late 2022, the US government broadened its crackdown on Chinese technology companies by adding over 20 Chinese firms in the AI chip sector to the US Commerce Department’s Entity List of restricted companies.

The US has shown that it will continue to aggressively legislate in the AI and chip segments and may add more companies (particularly those from China) to the US Entity List, which adds another layer of risk for the Asian semiconductor and hardware sectors. At the moment, Chinese technology platform companies have limited access to high-end microchips manufactured by US chipmakers, and as chip upgrades continue to progress, the Chinese players could find themselves further behind the technology curve.

Conclusions and ESG considerations

While we see many opportunities in the world’s second-largest economy, Asia is more than just China, especially in the technology space. Indeed, South Korea and Taiwan are among the most innovative economies in the world, with leadership in hardware and semiconductor technology.

We are just beginning to understand the impact of AI on industries outside technology and its implications for data ownership, the use of information and human rights. AI technology, for instance, is likely to have huge effects on the labour force, with the potential to displace many white-collar jobs. At the same time, widespread adoption of AI can affect the environment, as AI models and algorithms require substantial computing power, which in turn consumes considerable amounts of energy. All in all, there are AI-related ESG risks as well as legislative threats to consider when investing in AI companies, which could face increasing restrictions in the future.

For now, we are selective on Chinese AI companies but remain constructive on South Korea’s and Taiwan’s technology leaders, especially the AI growth beneficiaries—which we believe represent a significant fundamental change to be harnessed for years to come.
